The Linux wget command is a command-line utility that downloads files from the internet using HTTP, HTTPS, and FTP protocols. It’s designed to work non-interactively, meaning it can run in the background while you’re logged out—making it perfect for retrieving large files, automating downloads in scripts, and handling unreliable network connections. Most Linux distributions include wget by default, but if you need to install it, here’s how to do it across different distros.

Ubuntu/Debian-based distros:

sudo apt install wget

Fedora-based distros:

sudo dnf install wget

Red Hat-based distros (on RHEL 8 and later, yum is an alias for dnf, so either command works):

sudo yum install wget

Arch Linux-based distros:

sudo pacman -S wget

Alpine Linux:

apk add wget
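If you maintain a setup script that runs on several distributions, a small helper can pick the matching command from the list above. This is a sketch that assumes the system provides /etc/os-release with the usual ID and ID_LIKE fields. The function name wget_install_cmd is hypothetical, and the function only prints the command instead of running it.

```bash
#!/usr/bin/env bash
# Print the wget install command (from the list above) for the current distro.
# Assumption: the distro ships an os-release file with ID / ID_LIKE fields.
wget_install_cmd() {
    local os_release=${1:-/etc/os-release} id id_like candidate
    if [ ! -r "$os_release" ]; then
        echo "cannot read $os_release" >&2
        return 1
    fi
    # Values may be quoted, e.g. ID="rhel"; strip the quotes.
    id=$(sed -n 's/^ID=//p' "$os_release" | tr -d "\"'")
    id_like=$(sed -n 's/^ID_LIKE=//p' "$os_release" | tr -d "\"'")
    # Unquoted on purpose: ID_LIKE can hold several space-separated names.
    # ID is checked first, then each ID_LIKE entry in order.
    for candidate in $id $id_like; do
        case $candidate in
            ubuntu|debian) echo "sudo apt install wget"; return 0 ;;
            fedora)        echo "sudo dnf install wget"; return 0 ;;
            rhel|centos)   echo "sudo yum install wget"; return 0 ;;
            arch)          echo "sudo pacman -S wget"; return 0 ;;
            alpine)        echo "apk add wget"; return 0 ;;
        esac
    done
    echo "no known install command for ID=${id:-unset}" >&2
    return 1
}
```

On a derivative whose ID_LIKE lists ubuntu, wget_install_cmd prints sudo apt install wget. You can then check the printed command and run it yourself.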

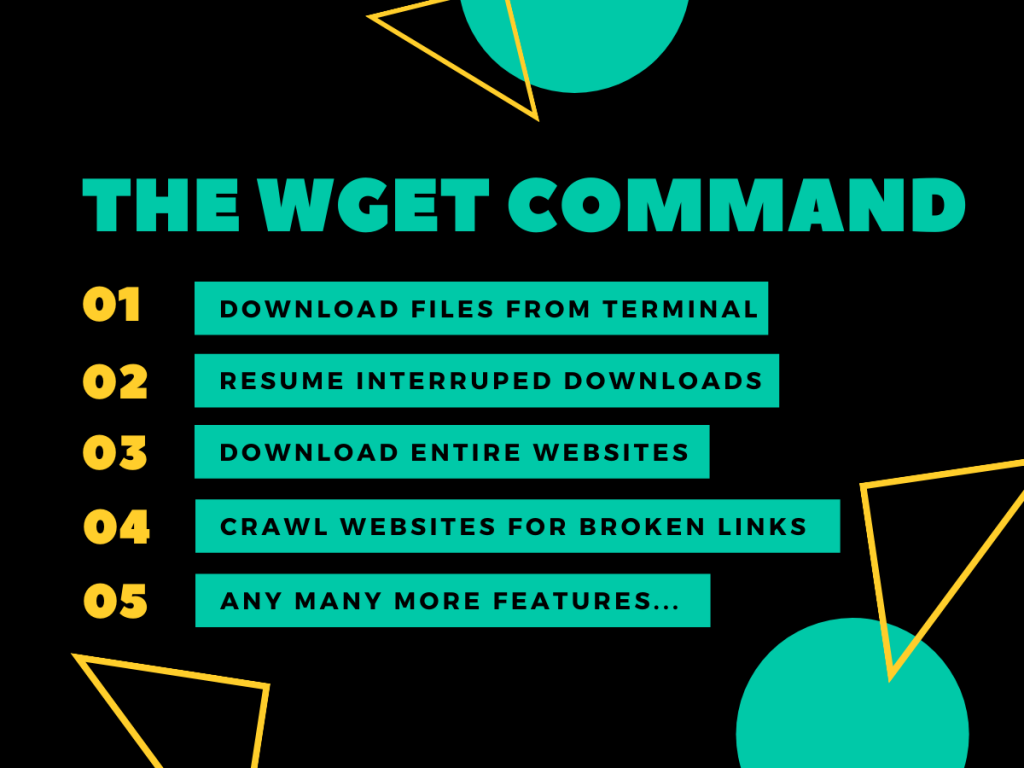

What Makes wget Different from Other Download Tools?

The wget command stands apart from other download utilities because of its robust feature set designed specifically for automation and unreliable networks. Unlike browser-based downloads or simpler tools, wget was built with scripting and unattended operations in mind.

Here are the key capabilities that make wget indispensable for system administrators and developers:

- Runs downloads in the background without requiring an active terminal session

- Automatically resumes interrupted downloads from where they left off

- Creates complete local mirrors of websites for offline browsing

- Crawls through websites to identify broken links and 404 errors

- Controls bandwidth usage to prevent overwhelming your network connection

- Handles authentication for password-protected resources

- Downloads multiple files from a list with a single command

The basic syntax of the wget command is:

wget [options] <URL>

How Do I Download Files with wget?

The simplest way to use wget is to provide it with a URL. Let’s download the Ubuntu Server ISO as a practical example. This demonstrates how wget handles large file downloads and displays progress information.

wget https://releases.ubuntu.com/22.04/ubuntu-22.04.3-live-server-amd64.iso

When you run this command, wget performs several operations in sequence. First, it resolves the domain name to an IP address. Then it establishes a connection to the server and begins downloading. The output shows you detailed information about the transfer, including the server connection details, file size, download speed, and an estimated time to completion with a progress bar.

By default, wget saves the file with its original filename from the server. But what if you want to save it with a different name? That’s where the -O (capital letter O) option comes in handy.

Saving Downloads with a Custom Filename

The -O option lets you specify exactly what you want to call the downloaded file. This is particularly useful when downloading files with cryptic names or when you want to maintain a specific naming convention in your scripts.

wget https://releases.ubuntu.com/22.04/ubuntu-22.04.3-live-server-amd64.iso -O ubuntu-server.iso

This downloads the Ubuntu ISO but saves it as “ubuntu-server.iso” in your current directory. The -O option is especially valuable when working with dynamically generated URLs that might produce files like “download.php?id=12345” – you can give them meaningful names instead.

Specifying a Different Download Directory

Rather than downloading files to your current directory, you can direct wget to save files in a specific location using the -P option. This is cleaner for organizing downloads and essential when automating downloads in scripts.

wget -P ~/Downloads https://releases.ubuntu.com/22.04/ubuntu-22.04.3-live-server-amd64.iso

This command downloads the ISO directly into your Downloads folder. If the directory does not exist yet, wget creates it, so running mkdir -p ~/Downloads beforehand is optional.

How Do I Resume an Interrupted Download?

Network interruptions happen. Your WiFi drops, your SSH connection times out, or you accidentally hit Ctrl+C. The -c option (short for “continue”) is what makes wget particularly valuable for large file downloads over unstable connections.

wget -c https://releases.ubuntu.com/22.04/ubuntu-22.04.3-live-server-amd64.iso

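Here’s how this works in practice: when you run wget with the -c option, it checks whether a partially downloaded file with the same name already exists (in the current directory, or in the directory given with -P). If it finds one, wget sends an HTTP request with a Range header asking the server for only the bytes after the ones you already have. A server that supports range requests replies with 206 Partial Content, and wget appends the new data to the existing file. If the server does not support range requests, wget refuses to restart the download from scratch, because that would overwrite the partial file; delete the file yourself if you really want a fresh start.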

This is especially valuable when downloading multi-gigabyte files like Linux ISOs, database backups, or video files. Instead of starting over from scratch each time your connection drops, you simply re-run the same wget command with -c and pick up where you left off. The savings in time and bandwidth can be significant, particularly on slower connections or when downloading from geographically distant servers.
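Re-running the command by hand works, but in a script you may want to repeat it automatically. The loop below is a sketch of that idea: it keeps calling wget -c until wget exits successfully or a run limit is reached. The function name and the defaults of 5 runs with 30-second pauses are arbitrary choices for this example, not wget settings. Keep in mind that wget already retries failed transfers on its own (see --tries), so this outer loop only matters when a whole wget run gives up.

```bash
#!/usr/bin/env bash
# Keep re-running `wget -c` until the download completes or max_runs is reached.
# max_runs and pause are arbitrary defaults chosen for this sketch.
resume_until_done() {
    local url=$1 max_runs=${2:-5} pause=${3:-30} run=1
    # Each run continues the partial file instead of starting over.
    while ! wget -c "$url"; do
        if [ "$run" -ge "$max_runs" ]; then
            echo "giving up after $run runs" >&2
            return 1
        fi
        run=$((run + 1))
        echo "wget failed; resuming in ${pause}s (run $run of $max_runs)" >&2
        sleep "$pause"
    done
}
```

For example, resume_until_done https://releases.ubuntu.com/22.04/ubuntu-22.04.3-live-server-amd64.iso 10 60 allows up to ten runs, one minute apart. If the server does not support resuming, every run fails the same way, which is why the loop is capped.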

How Can I Control wget’s Output Display?

While wget’s detailed output is helpful for interactive use, it becomes noise when running downloads in scripts or cron jobs. The good news is that wget provides several options to control exactly what information it displays.

Completely Quiet Mode

The -q flag stands for “quiet” and suppresses all output completely. This is useful for scripts where you only care about the exit status (0 for success, non-zero for failure).

wget -q <URL>
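Because -q hides everything, the exit status is the only signal a script gets. GNU wget documents distinct exit codes for different classes of failure, so a script can at least report which kind of failure happened. The helper names below (wget_status_message, quiet_fetch) are hypothetical; the meaning of each code follows the exit status section of the wget manual.

```bash
#!/usr/bin/env bash
# Map wget's documented exit codes to short descriptions.
wget_status_message() {
    case $1 in
        0) echo "no problems occurred" ;;
        1) echo "generic error" ;;
        2) echo "parse error (command-line options, .wgetrc or .netrc)" ;;
        3) echo "file I/O error" ;;
        4) echo "network failure" ;;
        5) echo "SSL verification failure" ;;
        6) echo "username/password authentication failure" ;;
        7) echo "protocol error" ;;
        8) echo "server issued an error response" ;;
        *) echo "unknown exit status $1" ;;
    esac
}

# Download quietly, but explain the failure on stderr if there is one.
quiet_fetch() {
    local status=0
    wget -q "$@" || status=$?
    if [ "$status" -ne 0 ]; then
        echo "wget failed: $(wget_status_message "$status")" >&2
    fi
    # Pass the original status through so callers can still branch on it.
    return "$status"
}
```

If the server answers a quiet_fetch request with 404, wget exits with status 8, so the function prints wget failed: server issued an error response and still returns 8 to the caller.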

Non-Verbose Mode

The -nv option provides a middle ground—it turns off verbose output but still displays error messages and completion notices. This is better for logging purposes since you’ll know if something went wrong.

wget -nv <URL>

Showing Only the Progress Bar

Sometimes you want to see download progress without all the verbose server connection details. The --show-progress option combined with -q gives you exactly that—just a clean progress bar.

wget -q --show-progress https://releases.ubuntu.com/22.04/ubuntu-22.04.3-live-server-amd64.iso

The --show-progress option was added in wget 1.16, so older versions reject it. If the bar doesn’t appear because wget decides it isn’t writing to a terminal, adding --progress=bar:force makes it draw the bar anyway. The combination of these flags is particularly useful in terminal multiplexers like tmux or screen, where you want to monitor progress without cluttering your terminal history.

How Do I Download Multiple Files at Once?

Rather than running wget multiple times, you can download several files in a single command by listing all URLs in a text file. This approach is cleaner, more maintainable, and perfect for automation.

First, create a text file with one URL per line. Let’s call it downloads.txt:

cat > downloads.txt << EOF
https://wordpress.org/latest.tar.gz
https://github.com/docker/compose/releases/download/v2.23.0/docker-compose-linux-x86_64
https://go.dev/dl/go1.21.5.linux-amd64.tar.gz
EOF

Now use the -i option (short for “input file”) to pass this list to wget:

wget -i downloads.txt

wget processes each URL sequentially, downloading them one after another. You can combine this with other options we’ve discussed. For example, wget -i downloads.txt -P ~/Downloads -q --show-progress downloads all files to your Downloads folder while showing only progress bars.

This method is particularly powerful for bulk downloads of software packages, documentation sets, or media files. You can even generate the URL list programmatically with scripts if you need to download files following a pattern.
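As a sketch of that idea, the function below prints one URL per line for a numbered series of files. The base URL and the report-NN.csv naming pattern are hypothetical placeholders; adjust the printf format to whatever pattern your files actually follow.

```bash
#!/usr/bin/env bash
# Print URLs like <base>/report-01.csv ... <base>/report-NN.csv, one per line.
# The report-%02d.csv pattern is a made-up example.
url_list() {
    local base=$1 first=$2 last=$3 i
    for i in $(seq "$first" "$last"); do
        printf '%s/report-%02d.csv\n' "$base" "$i"
    done
}

# Save the list for wget -i, or pipe it in directly (-i - reads URLs from stdin):
#   url_list https://example.com/data 1 12 > downloads.txt
#   url_list https://example.com/data 1 12 | wget -i - -P ~/Downloads
```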

How Can I Limit Download Speed with wget?

When you’re sharing a network connection or running background downloads, limiting wget’s bandwidth usage prevents it from saturating your connection and affecting other applications. The --limit-rate option gives you precise control over download speed.

Setting Bandwidth Limits

You can express the rate limit in bytes, kilobytes (k suffix), or megabytes (m suffix). Here’s a practical example limiting the download to 500 kilobytes per second:

wget --limit-rate=500k https://releases.ubuntu.com/22.04/ubuntu-22.04.3-live-server-amd64.iso

For slower connections or when you want to be very conservative with bandwidth, you might limit to 100k or even 50k. On faster connections where you just want to leave some bandwidth for other applications, you might set it to 2m or 5m.
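To pick a sensible limit, it helps to estimate how long a download will take at a given rate. The sketch below assumes wget’s binary suffixes (k = 1024 bytes, m = 1024 × 1024 bytes) and whole-number rates; wget itself also accepts decimal values such as 2.5k, which this integer-only helper does not handle. The function names are hypothetical, and because --limit-rate is an average, the result is an estimate.

```bash
#!/usr/bin/env bash
# Convert a --limit-rate value (e.g. 500k, 2m, 4096) to bytes per second.
# Assumes k = 1024 and m = 1024*1024, and integer amounts only.
rate_to_bytes() {
    case $1 in
        *[kK]) echo $(( ${1%?} * 1024 )) ;;
        *[mM]) echo $(( ${1%?} * 1024 * 1024 )) ;;
        *)     echo $(( $1 )) ;;
    esac
}

# Seconds needed to transfer size_bytes at the given rate, rounded up.
eta_seconds() {
    local size_bytes=$1 rate
    rate=$(rate_to_bytes "$2")
    echo $(( (size_bytes + rate - 1) / rate ))
}
```

For a 1000 MiB file (1048576000 bytes), eta_seconds 1048576000 500k prints 2048, a little over 34 minutes, while eta_seconds 1048576000 2m prints 500.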

Understanding How Rate Limiting Works

The --limit-rate option sets an average bandwidth target rather than a hard ceiling. wget implements the limit by sleeping after any network read that finished faster than the target rate allows, and over time this slows the TCP transfer to approximately the specified rate. Reaching that balance takes a little while, so short bursts above the limit are normal and the limit is less precise for very small files.

One limitation: you cannot change the rate limit while a download is in progress. However, you can stop the download (Ctrl+C), then resume it with a different rate limit using -c:

wget -c --limit-rate=200k https://releases.ubuntu.com/22.04/ubuntu-22.04.3-live-server-amd64.iso

This resumes the download from where you left off but now limits the speed to 200 KB/s. This technique is useful when network conditions change—for example, if other people start using your connection during the day, you can pause and resume with a lower rate limit.

How Do I Download from Password-Protected Sites?

Many web servers and FTP sites require authentication before allowing downloads. wget supports HTTP authentication (the Basic, Digest, and NTLM schemes) as well as FTP logins, and you can supply credentials in several ways, each with different security trade-offs.

HTTP/HTTPS Authentication

For HTTP or HTTPS sites, use the --http-user and --http-password options:

wget --http-user=myusername --http-password=mypassword https://example.com/protected/file.zip

However, this approach has a security problem: the password appears in your terminal command, in your shell history, and is visible to anyone who runs ps while wget is running. For better security, use the --ask-password option:

wget --http-user=myusername --ask-password https://example.com/protected/file.zip

This prompts you to enter the password interactively, keeping it out of logs and process listings. The password won’t be echoed to the screen as you type it.

FTP Authentication

For FTP downloads, use --ftp-user and --ftp-password:

wget --ftp-user=ftpuser --ftp-password=ftppass ftp://ftp.example.com/files/archive.tar.gz

You can also embed credentials directly in the URL, though this has the same security concerns:

wget ftp://username:password@ftp.example.com/files/archive.tar.gz

Storing Credentials Securely

For automated scripts that need authentication, store credentials in a .wgetrc file in your home directory. This keeps passwords out of your scripts and command history.

cat > ~/.wgetrc << 'EOF'
http_user=myusername
http_password=mypassword
ftp_user=ftpuser
ftp_password=ftppass
EOF

chmod 600 ~/.wgetrc

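The chmod 600 command ensures only you can read this file. Now wget will automatically use these credentials without needing them in your commands. Note that cat > replaces any existing ~/.wgetrc, so edit the file by hand if you already have one. Because .wgetrc settings apply to every server wget contacts, ~/.netrc, which wget also reads and which stores credentials per host, is often a better fit when different servers need different logins. For sensitive production environments, consider using more sophisticated secrets management tools rather than storing passwords in plain text files.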
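Since the file only stays private while its permissions stay at 600, a script that relies on it can check that before running wget. This sketch assumes GNU coreutils stat, whose -c %a format prints the octal mode (standard on most Linux distributions, but not BSD or macOS stat). The function name check_private is hypothetical.

```bash
#!/usr/bin/env bash
# Warn if a credentials file such as ~/.wgetrc is readable by group or others.
# Assumes GNU stat (-c %a prints the permission bits in octal, e.g. 600).
check_private() {
    local file=${1:-$HOME/.wgetrc} mode
    if [ ! -e "$file" ]; then
        echo "$file does not exist" >&2
        return 1
    fi
    mode=$(stat -c %a "$file")
    # The last two octal digits are the group and other permissions.
    case $mode in
        *00) echo "ok: $file is mode $mode" ;;
        *)   echo "warning: $file is mode $mode; run chmod 600 $file" >&2
             return 1 ;;
    esac
}
```

Calling check_private with no argument checks ~/.wgetrc; a script can stop before downloading if it returns non-zero.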

Can I Mirror an Entire Website with wget?

One of wget’s most powerful features is its ability to recursively download entire websites, creating local copies suitable for offline browsing. This is invaluable for archiving content, creating offline documentation mirrors, or analyzing website structure.

Basic Website Mirroring

The -m option (short for “mirror”) enables recursive downloading. Combined with other options, you can create a complete, browsable local copy:

wget -m -p -k -E https://example.com

Let me break down what each option does:

- -m (--mirror): turns on recursion and time-stamping, sets infinite recursion depth, and keeps FTP directory listings; it is currently equivalent to -r -N -l inf --no-remove-listing

- -p (--page-requisites): downloads all files necessary to properly display HTML pages, including images, CSS files, and JavaScript

- -k (--convert-links): converts links in downloaded HTML files to point to local files instead of the original URLs, making the site browsable offline

- -E (--adjust-extension): adds proper file extensions such as .html to files that don’t have them, which helps local web browsers render them correctly

Controlling Mirror Depth and Scope

Without constraints, wget will follow every link it finds, potentially downloading far more than you intended. Use these options to control the scope:

wget -m -p -k -E -l 3 --no-parent https://example.com/documentation/

The -l 3 option limits recursion to three levels deep from the starting URL. The --no-parent option prevents wget from following links to parent directories, keeping your mirror focused on the specified path. This is essential when you only want to mirror a section of a site, like documentation, without grabbing the entire domain.

Important warning: Be cautious when mirroring large sites. A site with thousands of pages can take hours to download and consume significant disk space. Check robots.txt (wget honors it by default during recursive downloads) and respect the website’s terms of service, since many sites explicitly prohibit automated scraping. Use the --wait option to add delays between requests to avoid overwhelming servers:

wget -m -p -k -E --wait=2 https://example.com

This adds a 2-second wait between each request, making your mirror operation much more respectful to the server.

How Can I Find Broken Links on a Website?

Website owners and developers need to identify broken links (404 errors) across their sites. wget’s --spider mode is well suited to this: it checks whether each resource exists without keeping it. In recursive mode wget still has to fetch HTML pages to discover their links, but it does not keep them once they have been parsed.

Running a Link Check

The --spider option combined with recursive crawling checks every link on a site:

wget --spider -r -l 5 https://yourwebsite.com -o link-check.log

Here’s what’s happening:

- --spider: enables spider mode, so wget only checks whether files exist instead of saving them

- -r: enables recursive link following

- -l 5: limits recursion depth to 5 levels to keep the check manageable

- -o link-check.log: saves all output to a log file for later analysis

Analyzing the Results

After the spider completes, search the log file for 404 errors:

grep -B 2 '404' link-check.log | grep 'http' | cut -d ' ' -f 4 | sort -u

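This pipeline extracts the URLs that returned 404 errors, sorted and deduplicated. The -B 2 flag includes the two lines before each 404 match, which usually contain wget’s request line (the timestamp followed by the URL); grep 'http' keeps that line and cut pulls out the URL field. Note that this is the address of the missing resource itself, not the page that links to it. Also, if wget had to resolve a hostname or open a new connection before the request, the URL line can sit more than two lines above the 404 and be missed.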

For larger sites, consider adding --no-parent to restrict the spider to specific sections, and use --wait=1 to avoid hammering your server with rapid-fire requests.
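Depending on your wget version, a recursive --spider run also ends with a summary block: a line such as Found 2 broken links., a blank line, then the broken URLs one per line. Assuming your log contains that summary (in an English locale), the awk sketch below prints just those URLs, which avoids guessing how many lines sit between a request and its 404. The function name broken_links is hypothetical.

```bash
#!/usr/bin/env bash
# Print the URLs listed after wget's "Found N broken link(s)." summary.
# Assumes an English-locale log from: wget --spider -r ... -o link-check.log
broken_links() {
    awk '
        /^Found [0-9]+ broken links?\.$/ { in_list = 1; next }  # summary header
        in_list && NF == 0 && seen       { exit }                # blank line after the URLs
        in_list && NF > 0                { print; seen = 1 }     # one broken URL per line
    ' "${1:-link-check.log}"
}
```

After the spider finishes, broken_links link-check.log prints one broken URL per line and prints nothing if the log reports no broken links.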

How Do I Run Downloads in the Background?

For long-running downloads, keeping your terminal open is impractical. The -b option sends wget to the background, letting you close your terminal or continue working while the download proceeds.

wget -b https://releases.ubuntu.com/22.04/ubuntu-22.04.3-live-server-amd64.iso

When you run this command, wget immediately returns control to your terminal and displays a message like “Continuing in background, pid 12345.” The process ID (12345 in this example) is important—you can use it to check the download status or stop it if needed.

Monitoring Background Downloads

wget automatically logs background download progress to a file named wget-log in the current directory. Monitor it in real-time with:

tail -f wget-log

You can also specify a custom log file location:

wget -b -o ~/downloads/ubuntu.log https://releases.ubuntu.com/22.04/ubuntu-22.04.3-live-server-amd64.iso

To stop a background download, use the kill command with the process ID:

kill 12345

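wget -b already detaches from your terminal, so the download survives closing it. Terminal multiplexers like screen or tmux are an alternative when you would rather keep wget in the foreground with its progress bar visible: start the download inside a session, detach, and reattach later over SSH without interrupting it.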
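In a script you usually want the PID captured automatically instead of copied by hand. The sketch below assumes wget -b prints its Continuing in background, pid N. line to standard output in an English locale. It reads that message from a saved file, extracts the PID, and checks whether the process is still alive with kill -0, which sends no signal and only tests that the process exists. The helper names and the bg.txt file are hypothetical.

```bash
#!/usr/bin/env bash
# Extract the PID from wget -b's "Continuing in background, pid N." message (read on stdin).
parse_bg_pid() {
    sed -n 's/^Continuing in background, pid \([0-9][0-9]*\)\..*$/\1/p'
}

# Succeeds while a process with this PID exists. PIDs can be reused
# after a process exits, so treat this as a quick check, not proof.
is_running() {
    kill -0 "$1" 2>/dev/null
}

# Usage (URL is a placeholder):
#   wget -b -o download.log https://example.com/big.iso > bg.txt
#   pid=$(parse_bg_pid < bg.txt)
#   if is_running "$pid"; then echo "still downloading"; else echo "finished or stopped"; fi
```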

How Do I Handle HTTPS Certificate Errors?

Sometimes wget refuses to download from HTTPS sites due to certificate validation issues. While you should generally fix the underlying certificate problem, there are legitimate cases where you might need to bypass these checks—like when dealing with self-signed certificates in development environments.

wget --no-check-certificate https://self-signed.example.com/file.zip

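The --no-check-certificate option disables SSL certificate validation. Use it cautiously and only when you trust the source, because it leaves you open to man-in-the-middle attacks. For a self-signed certificate you control, a safer option is usually --ca-certificate=FILE, which tells wget to trust that PEM certificate while keeping validation on. For production environments, always fix certificate issues properly rather than bypassing validation.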

Advanced wget Command Techniques Worth Knowing

Here are a few advanced techniques that come in handy once you start using the wget command regularly.

Downloading Files with Timestamping

The -N option enables timestamping, which only downloads files if they’re newer than your local copy. This is perfect for keeping local mirrors synchronized:

wget -N https://example.com/daily-data.csv

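If the remote file hasn’t changed since your last download, wget skips the transfer. It still contacts the server to compare timestamps, but that check is far cheaper than downloading the file again, which saves bandwidth and time when running regular sync jobs.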

Using wget with Retries

For unreliable connections, set the retry behavior explicitly. By default wget tries 20 times, but it does not retry fatal errors such as “connection refused” or a 404 response:

wget --tries=10 --retry-connrefused --waitretry=5 https://unstable-server.com/file.tar.gz

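This allows up to 10 attempts, retries even when the server explicitly refuses the connection, and waits between retries using linear backoff: 1 second after the first failure, 2 seconds after the second, and so on up to a maximum of 5 seconds.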
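To see how much waiting a given --tries and --waitretry pair can add in the worst case, the sketch below models the linear backoff schedule described in the wget manual. It is an estimate that ignores the time the attempts themselves take, and the function names are hypothetical.

```bash
#!/usr/bin/env bash
# Model of the --waitretry backoff: waits of 1, 2, 3, ... seconds, capped at max_wait.
# With N tries there are at most N-1 waits (none after the final attempt).
retry_waits() {
    local tries=$1 max_wait=$2 n
    for (( n = 1; n < tries; n++ )); do
        if (( n < max_wait )); then echo "$n"; else echo "$max_wait"; fi
    done
}

# Total worst-case waiting time in seconds for a tries / waitretry pair.
total_retry_wait() {
    retry_waits "$1" "$2" | awk '{ sum += $1 } END { print sum + 0 }'
}
```

For the command above (--tries=10 --waitretry=5), retry_waits 10 5 yields 1 2 3 4 5 5 5 5 5, so total_retry_wait 10 5 prints 35: at most 35 seconds of waiting across the nine retries.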

Downloading with Custom User-Agent Strings

Some servers block wget’s default user-agent. Impersonate a browser if necessary:

wget --user-agent="Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36" https://example.com/file.pdf

This sends a browser-like user-agent string instead of wget’s default Wget/version identifier, which gets past basic user-agent filters. The short form of the option is -U.

Conclusion

The Linux wget command transforms from a simple download tool into a sophisticated file transfer utility when you understand its full capabilities. Whether you’re automating downloads in scripts, mirroring entire websites, managing bandwidth-constrained connections, or handling authenticated resources, wget provides the reliability and flexibility needed for production environments.

The key to mastering wget is understanding how to combine options effectively. Rate limiting with resume capabilities, background downloads with authentication, recursive mirroring with depth controls—these combinations handle virtually any download scenario you’ll encounter.

For deeper exploration, the man command in Linux provides comprehensive documentation: man wget. You can also use wget --help for a quick reference of available options.

What is the Linux wget command used for?

The wget command in Linux is a non-interactive command-line utility used to download files from web servers. It supports HTTP, HTTPS, and FTP protocols and can work in the background, making it ideal for automated downloads, scripts, and handling unreliable network connections.

How can I use wget to download files?

To download a file with wget, simply provide the URL: wget https://example.com/file.zip. You can save it with a different name using wget -O newname.zip https://example.com/file.zip or specify a different directory with wget -P ~/Downloads https://example.com/file.zip.

Can wget download multiple files at once?

Yes, wget can download multiple files by reading URLs from a text file using the -i option: wget -i urllist.txt. Create a text file with one URL per line, and wget will download each file sequentially.

How do I limit the download speed with wget?

Use the --limit-rate option to control download speed. For example, wget --limit-rate=500k https://example.com/file.iso limits the download to 500 kilobytes per second. You can specify rates in bytes, kilobytes (k), or megabytes (m).

How can I specify the directory where wget should save the downloaded files?

Use the -P option followed by the directory path: wget -P ~/Downloads https://example.com/file.zip. This downloads the file directly into the specified directory, and wget creates the directory if it does not already exist.

How do I install wget on my system?

Installation varies by distribution. On Ubuntu/Debian, use sudo apt install wget. On Fedora, use sudo dnf install wget. On RHEL/CentOS, use sudo yum install wget. On Arch Linux, use sudo pacman -S wget.

How do I resume an interrupted download with wget?

Use the -c option (continue) to resume an interrupted download: wget -c https://example.com/largefile.iso. wget checks if a partially downloaded file exists and resumes from where it stopped, saving bandwidth and time.

What does the message “http request sent, awaiting response” mean when using wget?

This message indicates that wget has successfully sent an HTTP request to the server and is waiting for the server’s response before proceeding with the download. It’s a normal part of the connection process.

How do I download from password-protected websites with wget?

For HTTP/HTTPS sites, use wget --http-user=username --ask-password https://example.com/file.zip. For FTP sites, use wget --ftp-user=username --ftp-password=password ftp://example.com/file.tar.gz. The --ask-password option prompts for the password securely without storing it in your command history.

Can wget mirror entire websites for offline viewing?

Yes, use wget -m -p -k -E https://example.com to create a complete local mirror. The -m option enables mirroring mode, -p downloads page requisites (images, CSS, JS), -k converts links for offline browsing, and -E adds proper file extensions. Add --wait=2 to be respectful to servers.