This article explains how to download files on Ubuntu Linux from the command line. It covers four tools that can do the job: cURL, wget, w3m, and elinks, along with the benefits and drawbacks of each.

1. Downloading Files Using cURL

cURL is a command line tool for transferring data to and from servers, and it is commonly used to download files from the internet. Like wget, it supports multiple protocols such as HTTP, HTTPS, and FTP. Unlike wget, cURL can also upload files to a server, which wget is not designed to do.

However, one of its major drawbacks is that it has no built-in support for recursive downloading. If you want to download all the files from a website, you have to request each file yourself.
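cURL cannot crawl a site, but when the files you need follow a predictable naming pattern, its URL globbing can fetch a whole batch in one command: a range such as [1-3] in a quoted URL expands to several URLs, and #1 in the -o file name is replaced by the value matched by that range. The sketch below assumes hypothetical file names (file1.txt to file3.txt) and uses file:// URLs so that it runs without network access; replace them with your real http(s) URL.

```shell
#!/bin/sh
# Sketch: fetch a numbered series of files with cURL URL globbing.
# Assumption: the files are named file1.txt ... file3.txt (hypothetical);
# file:// URLs are used so the example runs offline.
set -e

src=$(mktemp -d)
dest=$(mktemp -d)
for n in 1 2 3; do
  printf 'contents of file %s\n' "$n" > "$src/file$n.txt"
done

# Quote the URL so the shell leaves the [1-3] range for cURL to expand.
# "#1" in the output name is replaced by the value matched by the first glob.
curl -sS -o "$dest/copy#1.txt" "file://$src/file[1-3].txt"

ls "$dest"
```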

The Benefits of cURL

There are many benefits to downloading files using the command line instead of a graphical user interface (GUI). For one, it is often faster and more efficient. It can also be scripted or automated, saving time if you need to download multiple files. Finally, some people simply prefer working in the terminal.
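To illustrate the scripting advantage, here is a minimal sketch that reads URLs from a text file (one per line) and downloads each one with cURL, keeping the remote file name (-O) and treating HTTP errors as failures (-f). The helper name download_all and the list file urls.txt are hypothetical, and file:// URLs stand in for real http(s) URLs so that the example runs without network access.

```shell
#!/bin/sh
# Sketch: download every URL listed in a text file (one URL per line).
# download_all and urls.txt are hypothetical names chosen for this example.
download_all() {
  # $1 = file with one URL per line, $2 = directory to save into
  mkdir -p "$2" || return 1
  while IFS= read -r url; do
    [ -n "$url" ] || continue   # skip blank lines
    # -f: fail on HTTP errors, -sS: quiet but still show errors,
    # -O: save under the remote file name (inside the target directory)
    if (cd "$2" && curl -fsS -O "$url"); then
      echo "saved:  $url"
    else
      echo "failed: $url" >&2
    fi
  done < "$1"
}

# Demo with local files and file:// URLs (swap in your real http(s) URLs).
src=$(mktemp -d)
out=$(mktemp -d)
printf 'alpha\n' > "$src/a.txt"
printf 'beta\n' > "$src/b.txt"
printf 'file://%s/a.txt\nfile://%s/b.txt\n' "$src" "$src" > "$src/urls.txt"
download_all "$src/urls.txt" "$out"
```

Running each transfer in its own subshell keeps the cd local, so a relative path to the URL list keeps working.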


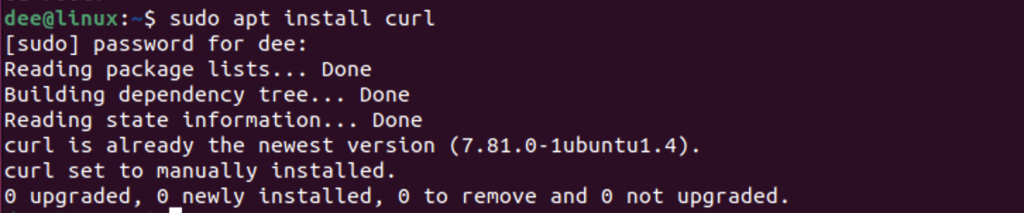

How to Install cURL?

Like wget, cURL comes pre-installed on many Linux distributions. If it isn't installed on your system, open a terminal (search for Terminal in the Ubuntu Dash, or press Ctrl+Alt+T) and enter the following command:

$ sudo apt install curl

On Fedora, use dnf instead (older releases use yum):

$ sudo dnf install curl

Features of cURL

cURL provides many options; some of the most useful are described below:

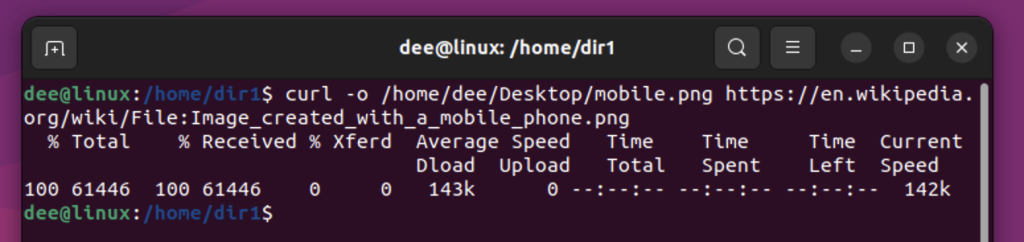

1) By default, cURL writes the downloaded content to standard output (the terminal) rather than to a file. Use the -O option to save the file in the current working directory under its remote name, or the -o option followed by a path to choose the file name and location yourself:

$ curl -O URL

$ curl -o /path/to/directory/filename URL

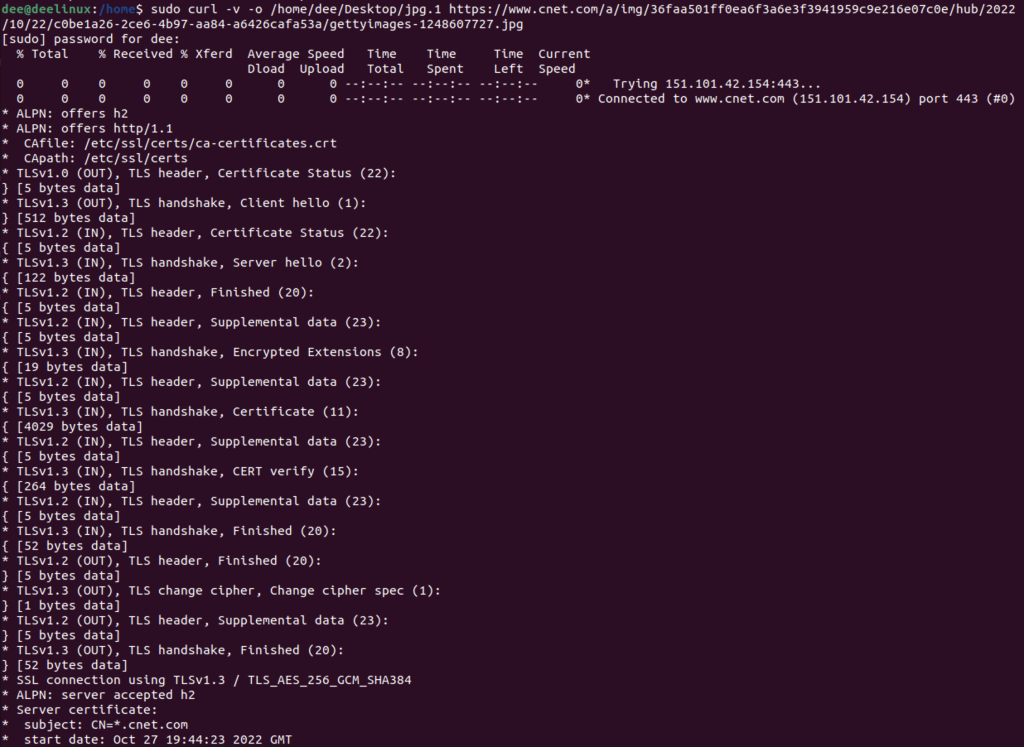
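A small sketch of saving to a chosen location: --create-dirs makes cURL create any missing directories in the -o path, and -f makes it exit with an error instead of saving an HTTP error page as if it were the file. The directory layout (downloads/reports) is a hypothetical example, and a file:// URL is used so the example runs offline.

```shell
#!/bin/sh
# Sketch: save a download under a specific name in a directory that may not exist yet.
# The downloads/reports layout is a hypothetical example.
set -e
work=$(mktemp -d)
printf 'quarterly numbers\n' > "$work/source.txt"

# -o sets the output path; --create-dirs creates downloads/reports if missing.
curl -fsS --create-dirs -o "$work/downloads/reports/report.txt" "file://$work/source.txt"

cat "$work/downloads/reports/report.txt"
```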

2) For more information about what happens during the transfer, use the -v (verbose) option:

$ curl -v URL

The -v option increases verbosity, so cURL also prints connection details and the request and response headers, which is useful for troubleshooting.

3) cURL can also limit its own bandwidth with the --limit-rate option. This keeps a large download from saturating your connection and reduces the load it places on the server. The command below caps the transfer at 200 KB per second; --max-redirs 0 sets the number of redirects cURL may follow to zero (it only matters when redirect following is enabled with -L):

$ curl --limit-rate 200k --max-redirs 0 http://example.com
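In a script you might wrap these options in a helper. The sketch below (polite_get is a hypothetical name) caps the speed with --limit-rate, retries transient failures with --retry, and follows at most five redirects with -L and --max-redirs. The default rate and retry count are arbitrary choices, and a file:// URL keeps the example offline.

```shell
#!/bin/sh
# Sketch: a bandwidth-limited, retrying download helper.
# polite_get and its defaults (200k, 3 retries, 5 redirects) are hypothetical choices.
polite_get() {
  # $1 = URL, $2 = output file, $3 = optional rate (default 200k)
  curl -fsS -L --max-redirs 5 \
       --limit-rate "${3:-200k}" \
       --retry 3 \
       -o "$2" "$1"
}

work=$(mktemp -d)
printf 'large file stand-in\n' > "$work/big.bin"
polite_get "file://$work/big.bin" "$work/copy.bin" 100k && echo "download finished"
```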

4) Lastly, to inspect a server's response headers, use the -I option. It sends a HEAD request, so cURL prints only the headers and not the file content. To get the headers together with the content, use -i instead:

$ curl -I URL

$ curl -i URL
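Because -I fetches only the headers, it is a cheap way to check that a URL is reachable before downloading it. In the sketch below (url_exists is a hypothetical helper), -f makes cURL exit with a non-zero status on HTTP errors such as 404, and -s with -o /dev/null hides the output so only the exit status matters. Some servers answer HEAD requests differently from GET requests, so treat a failure as a hint rather than proof. The example is exercised offline with file:// URLs, for which cURL fails when the file does not exist.

```shell
#!/bin/sh
# Sketch: check whether a URL exists using a header-only (-I) request.
# url_exists is a hypothetical helper name.
url_exists() {
  curl -fsI -o /dev/null "$1"
}

work=$(mktemp -d)
printf 'hello\n' > "$work/present.txt"

for u in "file://$work/present.txt" "file://$work/missing.txt"; do
  if url_exists "$u"; then
    echo "exists:  $u"
  else
    echo "missing: $u"
  fi
done
```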

For other available options and their syntax, see the cURL manual page by entering the following command in the terminal:

$ man curl

2. Downloading Files Using wget

Wget is another command line tool for downloading files from the internet. It is very popular among Linux users and has been around for a long time. Wget supports multiple protocols such as HTTP, HTTPS, and FTP. One of its major advantages over cURL is recursive downloading (the -r option): it can download an entire website, with all its files and subdirectories, in one go.

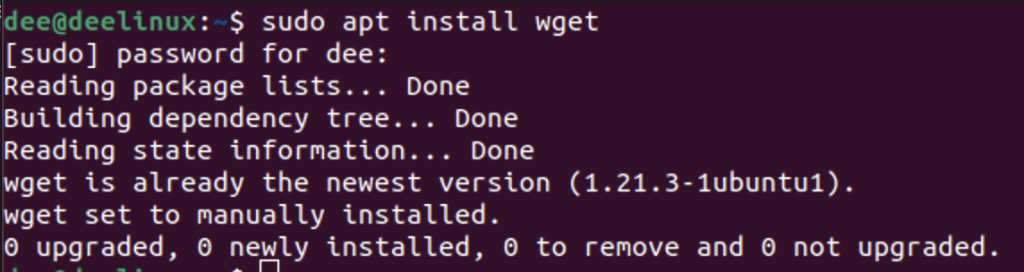
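Recursive mode is usually combined with a few related options: --level (maximum link depth), --no-parent (never climb above the starting directory), --convert-links (rewrite links for offline viewing), --page-requisites (also fetch the images and stylesheets a page needs), and --wait (pause between requests). The wrapper below is a minimal sketch; the name mirror_site, the default depth of 2, and the one-second wait are hypothetical choices.

```shell
#!/bin/sh
# Sketch: mirror part of a website with wget's recursive mode.
# mirror_site, the default depth of 2, and --wait=1 are hypothetical choices.
mirror_site() {
  # $1 = start URL, $2 = destination directory, $3 = optional max depth
  wget --recursive --level="${3:-2}" --no-parent \
       --convert-links --page-requisites \
       --wait=1 \
       --directory-prefix="$2" "$1"
}

# Example (needs network access):
#   mirror_site https://example.com/docs/ ./docs-mirror
```

--no-parent matters most when you start below the site root: without it, links pointing back up the hierarchy can pull in far more of the site than you intended.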

How to Install wget?

Like cURL, wget comes pre-installed on many Linux distributions. If it isn't installed on your system, open a terminal (search for Terminal in the Ubuntu Dash, or press Ctrl+Alt+T) and enter the following command:

$ sudo apt install wget

On Fedora, use dnf instead (older releases use yum):

$ sudo dnf install wget

Note that apt is the package manager on Debian-based systems such as Ubuntu, while Red Hat-based systems such as Fedora use dnf (or yum on older releases).
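If you write setup scripts that must run on both families, one option is to detect which package manager is available. The sketch below (install_cmd is a hypothetical helper) checks for apt-get, dnf, and yum in that order and only prints the command it would run, so you can inspect it first. It uses apt-get rather than apt because apt's command-line interface is intended for interactive use.

```shell
#!/bin/sh
# Sketch: print the install command for a package on Debian- or Red Hat-based systems.
# install_cmd is a hypothetical helper; it prints the command instead of running it.
install_cmd() {
  if command -v apt-get >/dev/null 2>&1; then
    echo "sudo apt-get install -y $1"
  elif command -v dnf >/dev/null 2>&1; then
    echo "sudo dnf install -y $1"
  elif command -v yum >/dev/null 2>&1; then
    echo "sudo yum install -y $1"
  else
    echo "no supported package manager found" >&2
    return 1
  fi
}

# Print the install command only if wget is missing.
# To run it for real, you could use:  sh -c "$(install_cmd wget)"
if ! command -v wget >/dev/null 2>&1; then
  install_cmd wget
fi
```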

Features of wget

The wget command provides many options; some of the most useful are described below:

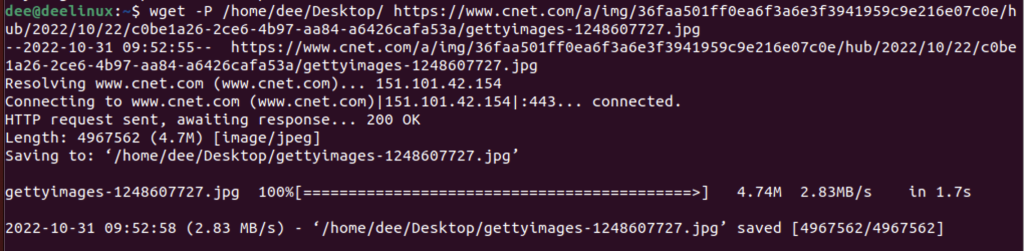

1) Unlike cURL, wget saves downloaded files to the current working directory by default, using the remote file name. To save them somewhere else, use the -P (--directory-prefix) option followed by the desired directory:

$ wget -P /path/to/directory URL
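-P combines well with -i (--input-file), which reads the URLs to download from a file, one per line. The wrapper below is a sketch; fetch_list is a hypothetical name, and --no-clobber is added so that files already present are skipped instead of being saved again with a .1 suffix.

```shell
#!/bin/sh
# Sketch: download every URL listed in a file into one directory with wget.
# fetch_list is a hypothetical helper name.
fetch_list() {
  # $1 = file with one URL per line, $2 = target directory
  wget --no-clobber --directory-prefix="$2" --input-file="$1"
}

# Example (needs network access):
#   printf '%s\n' https://example.com/a.pdf https://example.com/b.pdf > urls.txt
#   fetch_list urls.txt ./downloads
```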

2) You can also choose the name of the saved file with the -O (--output-document) option:

$ wget --output-document=filename URL

Here, filename is the name the downloaded file will be saved under. wget uses this name exactly as given and does not add an extension for you, so include one yourself (for example, photo.jpg or report.pdf).

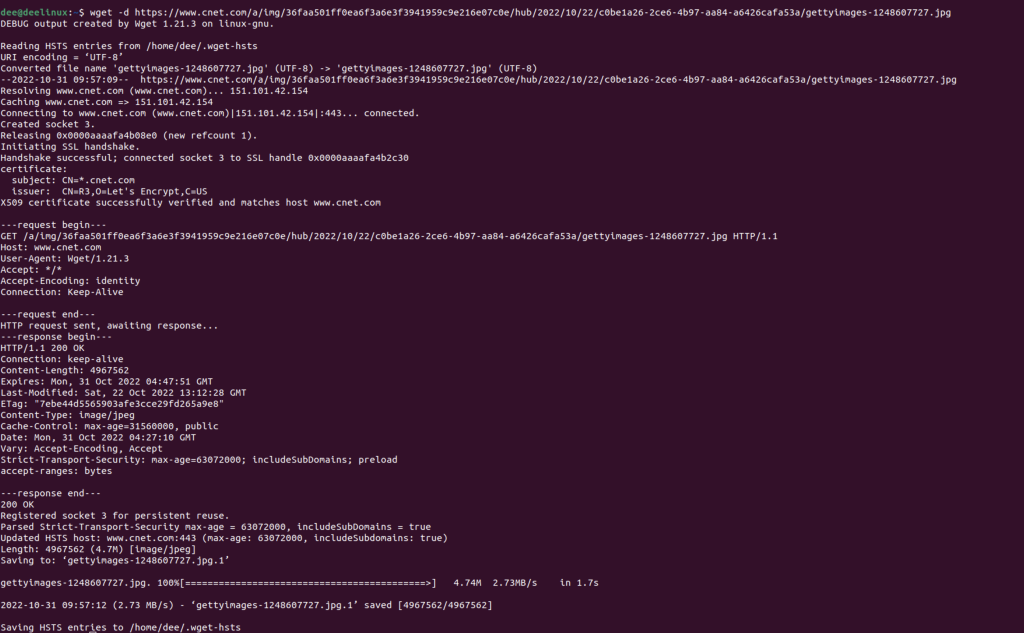

3) For more information about what is going on during the transfer, use the -d or --debug option:

$ wget -d URL

OR

$ wget --debug URL

Debug output only works if wget was compiled with debug support.

4) Like cURL, wget can cap its download speed with the --limit-rate option, which keeps a large download from saturating your connection and reduces the load on the server. The following command limits the transfer to 200 KB per second:

$ wget --limit-rate=200k http://example.com

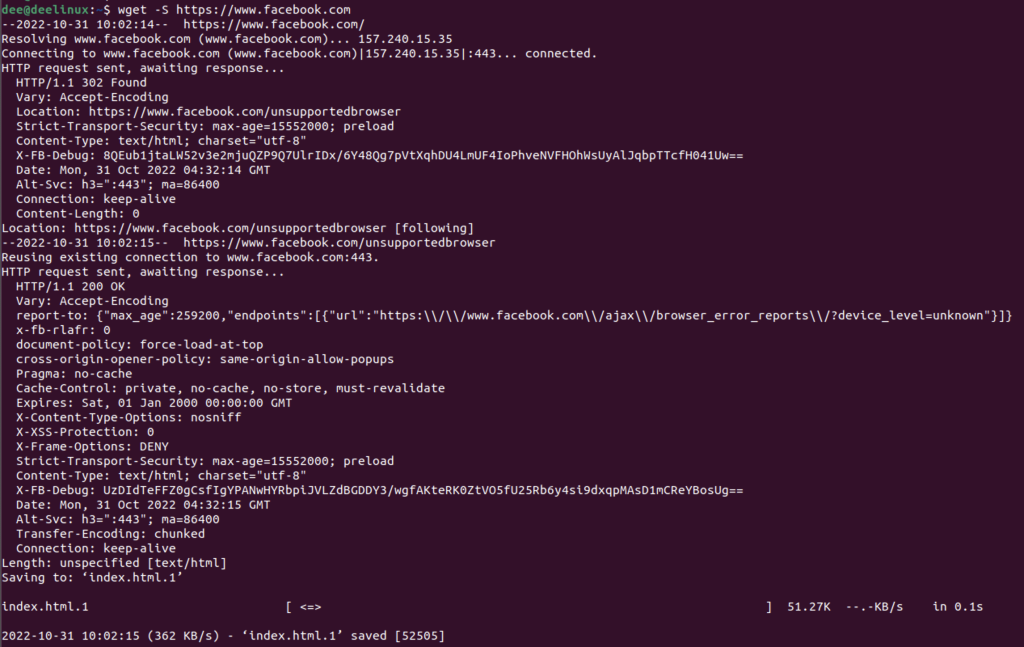
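When fetching several files from the same server, --limit-rate is often combined with --wait (a pause between requests, in seconds), --random-wait (vary that pause), and --tries (number of attempts per file). The sketch below wraps them in a hypothetical gentle_fetch helper; the specific values are arbitrary.

```shell
#!/bin/sh
# Sketch: throttle wget when downloading several files from one server.
# gentle_fetch and the chosen values (200k, 2 s, 3 tries) are hypothetical.
gentle_fetch() {
  # "$@" = one or more URLs
  wget --limit-rate=200k --wait=2 --random-wait --tries=3 "$@"
}

# Example (needs network access):
#   gentle_fetch https://example.com/file1.iso https://example.com/file2.iso
```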

5) Lastly, to see the HTTP headers the server sends back, use the -S or --server-response option. wget prints the headers and still downloads the file:

$ wget -S URL

OR

$ wget --server-response URL

For other available options and their syntax, see the wget manual page by entering the following command in the terminal:

$ man wget

Alternatively, visit the official website at https://www.gnu.org/software/wget/.

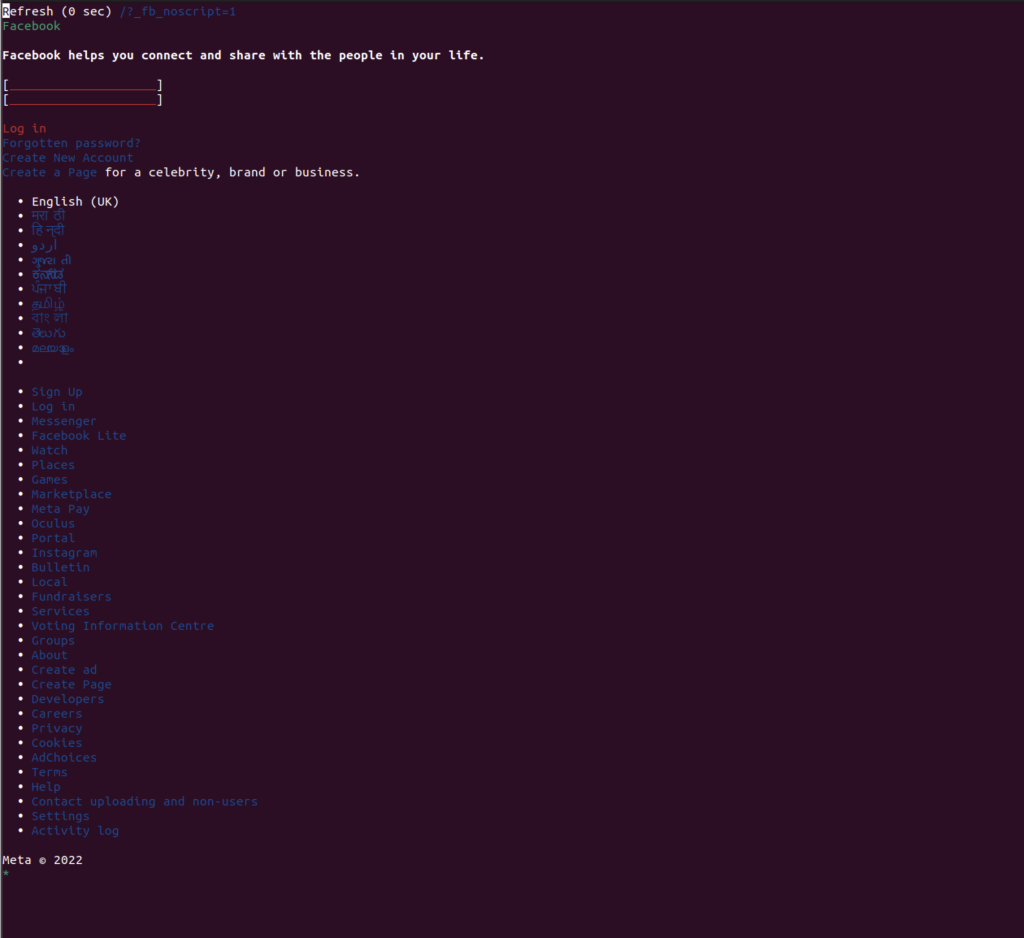

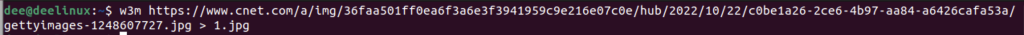

3. Downloading Files Using w3m

w3m is a text-based web browser that can also be used to download content from the internet. It supports multiple protocols such as HTTP, HTTPS, and FTP. With the optional w3m-img package, it can also display images in some terminals, which can help when you are working with image files.

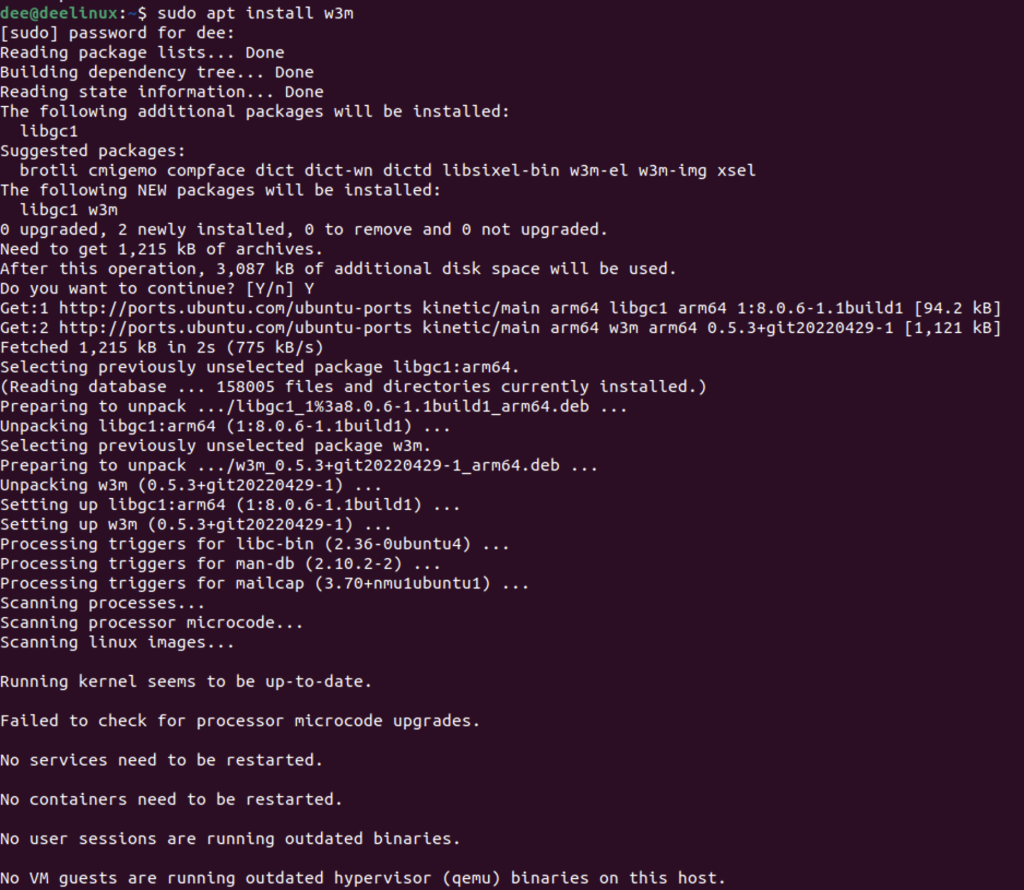

How to Install w3m?

w3m is not always installed by default. If it is missing from your system, open a terminal (search for Terminal in the Ubuntu Dash, or press Ctrl+Alt+T) and enter the following command:

$ sudo apt install w3m

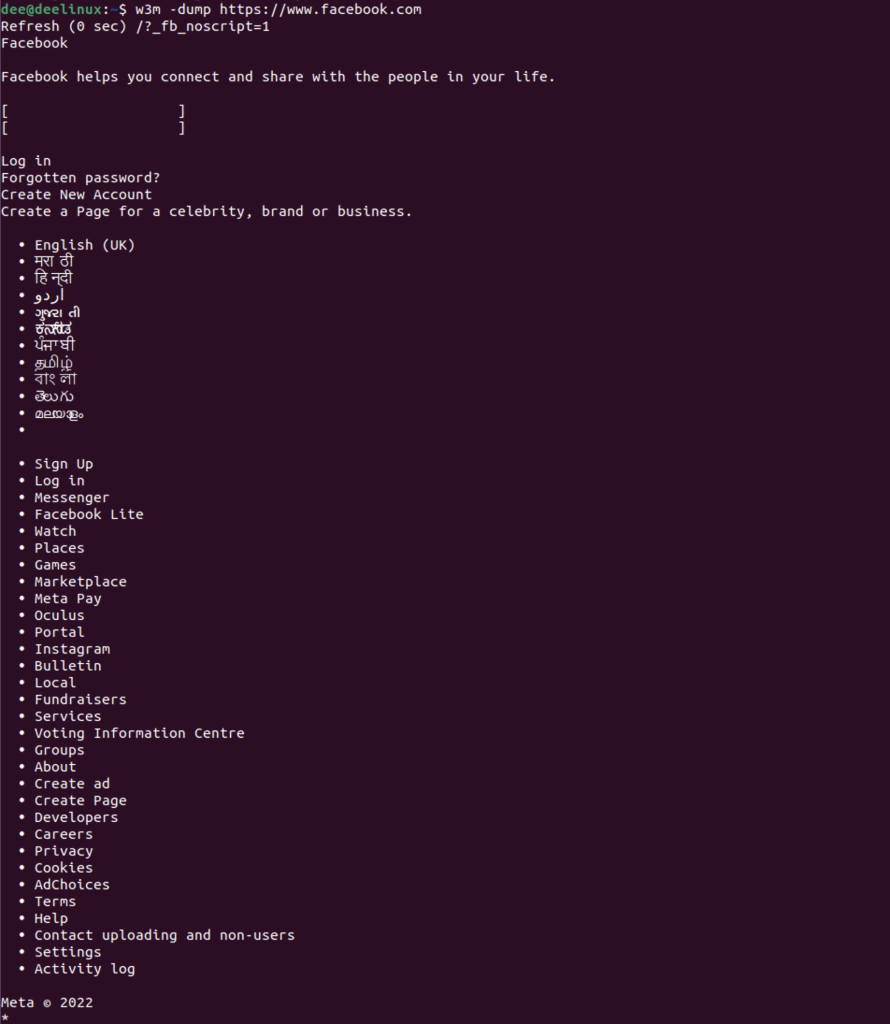

Features of w3m

One of w3m's most useful features for downloading is that it can write a page to standard output instead of opening it interactively: -dump prints the page rendered as plain text, and -dump_source prints its raw HTML source. Some related options are described below:

- The -O option sets the character set of the output, for example UTF-8:

$ w3m -O UTF-8 -dump URL

- To save the rendered page to a file, redirect the dump output with the > operator:

$ w3m -dump URL > filename

- w3m has no built-in recursive download feature, but it can list all the links on a page. With the display_link_number option enabled, the -dump output ends with a numbered list of the page's links:

$ w3m -dump -o display_link_number=1 URL
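Because w3m writes to standard output when dumping, it is easy to script. The sketch below (save_page is a hypothetical helper) saves both the rendered text (-dump) and the raw HTML source (-dump_source) of a page; note that it requests the page twice, once per option.

```shell
#!/bin/sh
# Sketch: save a page as rendered text and as raw HTML using w3m.
# save_page is a hypothetical helper name.
save_page() {
  # $1 = URL, $2 = output path without extension
  w3m -dump "$1" > "$2.txt" &&
    w3m -dump_source "$1" > "$2.html"
}

# Example (needs network access):
#   save_page https://example.com ./example
#   -> creates example.txt (rendered text) and example.html (source)
```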

For other available options and their syntax, see the w3m manual page by entering the following command in the terminal:

$ man w3m

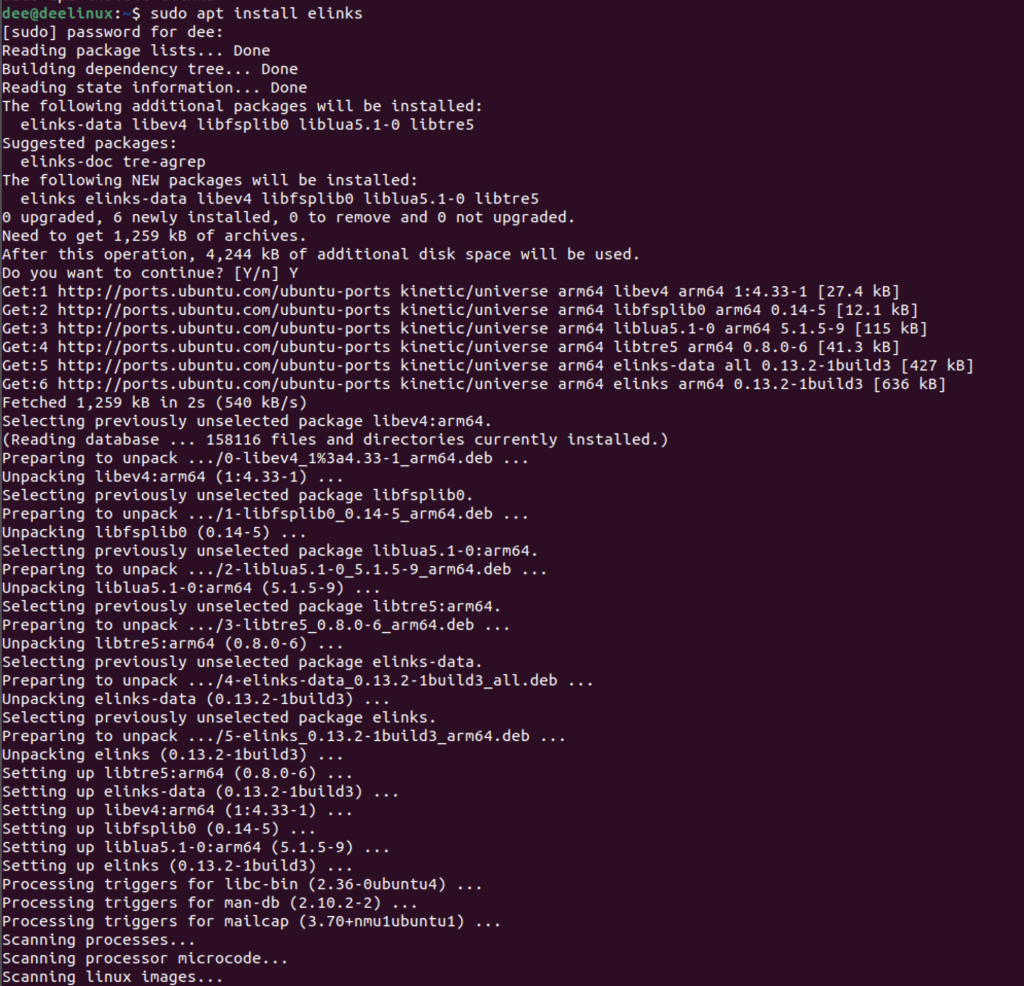

4. Downloading Files Using elinks

elinks is another text-based web browser that, like wget and cURL, supports multiple protocols such as HTTP, HTTPS, and FTP. Unlike wget, however, it has no recursive download feature: as an interactive browser, it lets you open pages, follow links, and save the files you need one at a time.

Installation of elinks

Install elinks with apt:

$ sudo apt install elinks

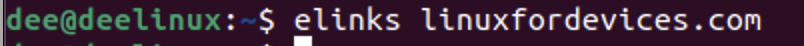

Interacting with elinks

elinks fetches a web page and renders it as text in the terminal. To open a website, run the following command:

$ elinks URL

For other available options and their syntax, see the elinks manual page by entering the following command in the terminal:

$ man elinks
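Like w3m, elinks can render a page non-interactively: -dump prints the formatted text to standard output, -no-references drops the list of link URLs it otherwise appends, and -no-numbering removes the [n] link markers from the text. The sketch below (dump_page is a hypothetical helper) saves a page as plain text.

```shell
#!/bin/sh
# Sketch: save a web page as plain text using elinks' non-interactive dump mode.
# dump_page is a hypothetical helper name.
dump_page() {
  # $1 = URL, $2 = output file
  elinks -dump -no-references -no-numbering "$1" > "$2"
}

# Example (needs network access):
#   dump_page https://example.com example.txt
```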

Conclusion

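In conclusion, there are several ways to download files on Ubuntu Linux from the command line. cURL is well suited to single-file transfers, scripting, and uploads; wget adds recursive downloads of entire websites; and w3m and elinks are text-based browsers that let you view pages in the terminal and save their contents. Consider each tool's strengths and drawbacks before choosing one.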